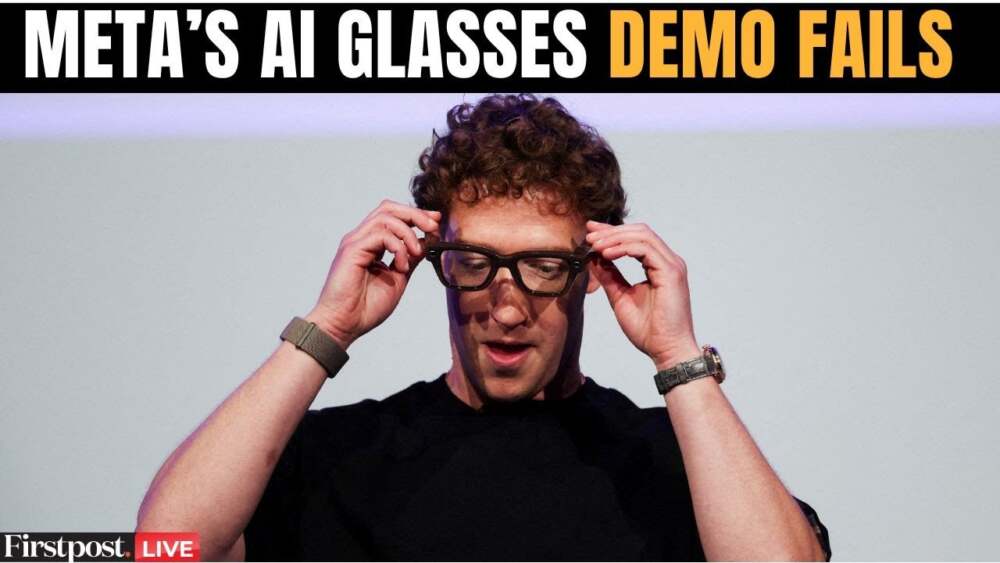

Meta’s much-anticipated showcase of its latest Ray-Ban smart glasses turned into a cautionary tale this week, as multiple live demonstrations malfunctioned in front of a large audience. The event, meant to highlight cutting-edge AI integration, instead exposed glaring technical fragility — a public reminder that brilliant concepts still need to prove reliability.

What Happened on Stage

During the live presentation, Meta CEO Mark Zuckerberg attempted to demonstrate features such as voice-activated “Live AI” assistance and WhatsApp video calling via the new Ray-Ban Display smart glasses paired with a neural wristband. But things quickly unraveled:

- A cooking demo was derailed when the AI assistant misinterpreted steps in a recipe. The smart glasses mistakenly claimed ingredients had already been combined—despite nothing being added.

- A voice command (“Hey Meta, start Live AI”) activated every single device that had “Live AI” mode enabled in the building, overwhelming the system.

- Zuckerberg later tried to answer an incoming video call using gesture controls on the neural wristband, but the interface failed to respond across multiple attempts.

- The presenters initially blamed Wi-Fi issues on stage, though a later explanation from Meta’s technical leadership pointed to deeper software and resource management problems.

The presenters tried to maintain composure. At one point Zuckerberg said, “This is, uh … it happens,” after yet another failed attempt. The audience, meanwhile, watched awkwardly as cues failed, features didn’t respond, and glitches turned what should have been a polished launch into a spectacle of technical misfires.

Why It Failed: Meta’s Explanation

Meta’s Chief Technology Officer, Andrew Bosworth, later broke down the core technical causes:

- Traffic Surge / Resource Misallocation

The command to start Live AI triggered every Ray-Ban Meta device in the auditorium. Meta had routed all that traffic to the same development server to isolate the demo environment, but the volume was far beyond what they had anticipated. The result? Server overload similar to what happens in a Distributed Denial of Service (DDoS) scenario. He admitted, “We DDoS’d ourselves.”

- Bug in Display Wake-Up Logic (“Race Condition”)

The video call feature failed because when the call arrived, the glasses’ display had entered sleep mode. Upon waking, the notification to accept the call never appeared. Bosworth described this as a “never-before-seen bug,” arising from a “race condition”: a scenario where two or more processes interfere with each other because of unpredictable timing.

- Mismatch Between Rehearsal and Reality

Bosworth noted that tests prior to the event had gone smoothly. The problem was that rehearsals had far fewer smart glasses active, far fewer people invoking Live AI simultaneously. The scale of live audience interaction simply overwhelmed the setup.
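To make the “race condition” idea concrete, here is a minimal Python sketch of how a notification can vanish when it arrives before a display has finished waking. It is purely illustrative: Meta has not published the code involved, and every name here (`BuggyDisplay`, `QueuedDisplay`, `finish_wake`, `post`, `run`) is hypothetical. The sketch replays the same two events in both possible orders, so the outcome depends only on timing.

```python
from dataclasses import dataclass, field


@dataclass
class BuggyDisplay:
    """Hypothetical display that drops notifications posted while asleep."""
    awake: bool = False
    shown: list = field(default_factory=list)

    def finish_wake(self):
        self.awake = True

    def post(self, notification):
        # Check-then-act: if the wake has not finished, the notification is lost.
        if self.awake:
            self.shown.append(notification)


@dataclass
class QueuedDisplay:
    """Same display, but notifications wait in a queue until the wake completes."""
    awake: bool = False
    shown: list = field(default_factory=list)
    pending: list = field(default_factory=list)

    def finish_wake(self):
        self.awake = True
        # Flush anything that arrived while the display was asleep.
        self.shown.extend(self.pending)
        self.pending.clear()

    def post(self, notification):
        if self.awake:
            self.shown.append(notification)
        else:
            self.pending.append(notification)


def run(display_cls, order):
    """Replay 'wake' and 'post' events in the given order; return what was shown."""
    display = display_cls()
    for event in order:
        if event == "wake":
            display.finish_wake()
        else:
            display.post("incoming call")
    return display.shown


if __name__ == "__main__":
    for order in (["wake", "post"], ["post", "wake"]):
        print(order, run(BuggyDisplay, order), run(QueuedDisplay, order))
```

In the buggy version, the call prompt appears only when the wake happens to finish first. The queued version shows it in either order, which is one common way to remove this kind of timing dependence, though not necessarily the fix Meta applied.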

Meta emphasized that these issues were “demo fails,” not product fails — asserting that the software problems have been addressed, and that the hardware and baseline functionality remain solid. Bosworth insisted the underlying technology works as intended in controlled environments; the bugs and overloads were caught and fixed post-event.

Why This Matters

These kinds of public tech failures are instructive for several reasons:

- Proof vs Presentation: For tech companies, live demos are vital milestones because they show what a product can actually do. When the show stumbles, it erodes confidence, even if the product itself isn’t faulty.

- Expectations Are Elevated: Meta has invested heavily in AI, wearables, and augmented reality. The narrative around “smart glasses as the future” has high stakes. Failures at this scale are not just embarrassing—they can affect brand reputation in a competitive field.

- User Experience is Not a Lab Test: Real users interact with products in uncontrolled, messy contexts. Connectivity may vary; many devices may be online in one place; people will talk to them simultaneously. Meta’s glitch exposed how “real world” demand patterns can stress systems differently than internal tests.
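The gap between rehearsal and a full auditorium can be illustrated with a toy capacity model in Python. This is a rough sketch, not a description of Meta’s infrastructure: the function `serve` and the figures for server capacity and device counts are invented for illustration, since Meta has not disclosed the real numbers.

```python
def serve(sessions_requested: int, capacity: int) -> tuple[int, int]:
    """Return (served, rejected) for one burst hitting a fixed-capacity server."""
    served = min(sessions_requested, capacity)
    return served, sessions_requested - served


# Hypothetical numbers, chosen only to show the shape of the problem.
CAPACITY = 20             # concurrent Live AI sessions one dev server can hold
REHEARSAL_DEVICES = 5     # a handful of glasses active during rehearsal
AUDITORIUM_DEVICES = 500  # every pair in the room reacting to the wake phrase

if __name__ == "__main__":
    print("rehearsal:", serve(REHEARSAL_DEVICES, CAPACITY))
    print("live event:", serve(AUDITORIUM_DEVICES, CAPACITY))
```

With these assumed figures the rehearsal burst fits comfortably, while the live burst leaves most requests unserved. A test that never exceeds capacity cannot reveal what happens when demand does.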

The Product Side: What We Know

Despite the demo issues, the new Ray-Ban smart glasses offer a suite of impressive features that suggest potential:

- The Ray-Ban Display version includes a small screen embedded in a lens, allowing for overlaying notifications, captions, or contextual info.

- Gesture control via a neural wristband adds a novel input modality: instead of touch or voice, users can use wrist motions to send messages or interact with the interface.

- Other models in the line-up focus on improved battery life, better cameras, and rugged design for outdoor or sport usage.

What’s Next for Meta

- Fixes & Software Updates: The immediate focus is on patching the software bugs, improving server capacity, and making sure the features that failed on stage work seamlessly in larger group settings.

- User Feedback & Real-World Trials: Reviewers who tested pre-release units reportedly saw promise. Meta will need to lean into early adopter feedback and field testing to refine both hardware and AI logic.

- Marketing vs Reality: Messaging will be crucial. Meta must balance excitement about future potential with transparency around current limitations. Overpromising can lead to backlash if users feel they were sold something not ready.

- Competition Pressure: Other tech giants are also pushing into wearable AI and AR. Meta’s missteps give them breathing room to gain ground, though Meta’s brand and resources give it advantages too.

The Takeaway

Meta’s smart glasses demo made headlines for technical embarrassment rather than innovation. In context, though, the failures, while uncomfortable, reveal more about the challenge of scaling “smart, always-listening” devices than they do about Meta’s capacity as a whole.

The device features show real promise, but considerations around reliability, server infrastructure, AI accuracy, and user expectations remain central. If Meta can deliver stable performance and a polished, reliable user experience, these glasses may still shape the future of wearable tech. Until then, the demo will serve as a cautionary example of how high the bar has become for live tech reveals.
