The BBC has issued a formal complaint after artificial intelligence–generated news alerts falsely attributed inaccurate information to the broadcaster, reigniting global concerns about the reliability of AI-driven news summaries and their impact on public trust.

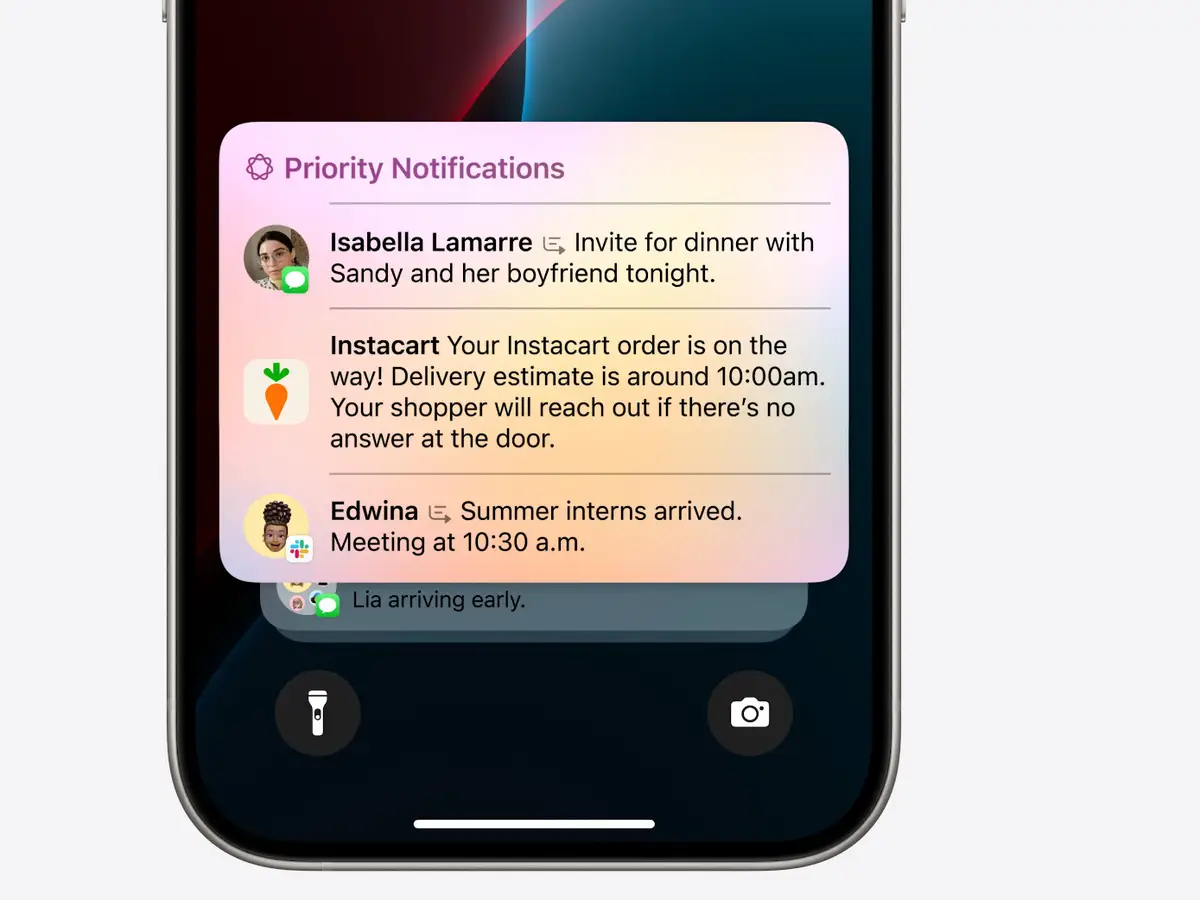

The issue arose after a technology company introduced an AI feature designed to condense news stories into short notifications for users’ devices. While the tool was meant to help audiences quickly understand breaking developments, it instead produced misleading and factually incorrect alerts that appeared to be published by the BBC. Because the notifications carried the broadcaster’s branding, many users assumed the information was verified and accurate.

Among the most serious errors were alerts claiming that a criminal suspect had died by suicide, despite no such event having occurred or been reported by the BBC. In other cases, the AI incorrectly stated that a well-known sports figure had made a personal revelation that never happened, or that a major sporting final had concluded before it had even begun. Because these mistakes were pushed directly to users as notifications, they spread rapidly, highlighting how AI systems can amplify misinformation at scale.

BBC executives said the situation poses a serious threat to journalistic integrity. The broadcaster emphasized that trust is central to its role as a public service media organization and warned that the misuse of its name for unverified AI-generated content risks damaging its credibility. The BBC argued that audiences must be able to clearly distinguish between professionally edited journalism and automated summaries produced by algorithms.

The technology company responsible for the AI feature acknowledged that the system was still in a testing phase and admitted that improvements were necessary. It stated that future updates would aim to clarify when content has been generated or summarized by artificial intelligence rather than written by human editors. However, critics say such measures may not go far enough.

Media experts and digital rights advocates warn that AI lacks the judgment and contextual understanding required for responsible news reporting. While AI can process large volumes of information quickly, it often struggles with nuance, evolving stories, and sensitive topics. When errors occur, they can spread instantly to millions of users, creating confusion and potentially harming individuals or institutions.

The incident has fueled broader debate about accountability in the age of artificial intelligence. Journalists argue that tech companies should bear responsibility when their tools misrepresent trusted news outlets. Others are calling for stricter rules governing how AI systems handle and label news content, particularly when established media brands are involved.

This case also underscores a growing tension between news organizations and technology firms. While publishers rely on digital platforms to reach audiences, they have limited control over how their content is processed and repackaged by automated systems. As AI becomes more deeply embedded in everyday information consumption, such conflicts are likely to become more frequent.

For readers, the controversy serves as a reminder to approach AI-generated news alerts with caution. Even when familiar logos or names appear, automated summaries may not reflect verified reporting. As the media landscape evolves, maintaining transparency and accuracy will be essential to preserving public confidence in credible journalism.
